Nvidia CEO Jensen Huang on Thursday clarified remarks he made in January, when he expressed skepticism that useful quantum computers would reach the market within the next 15 years.

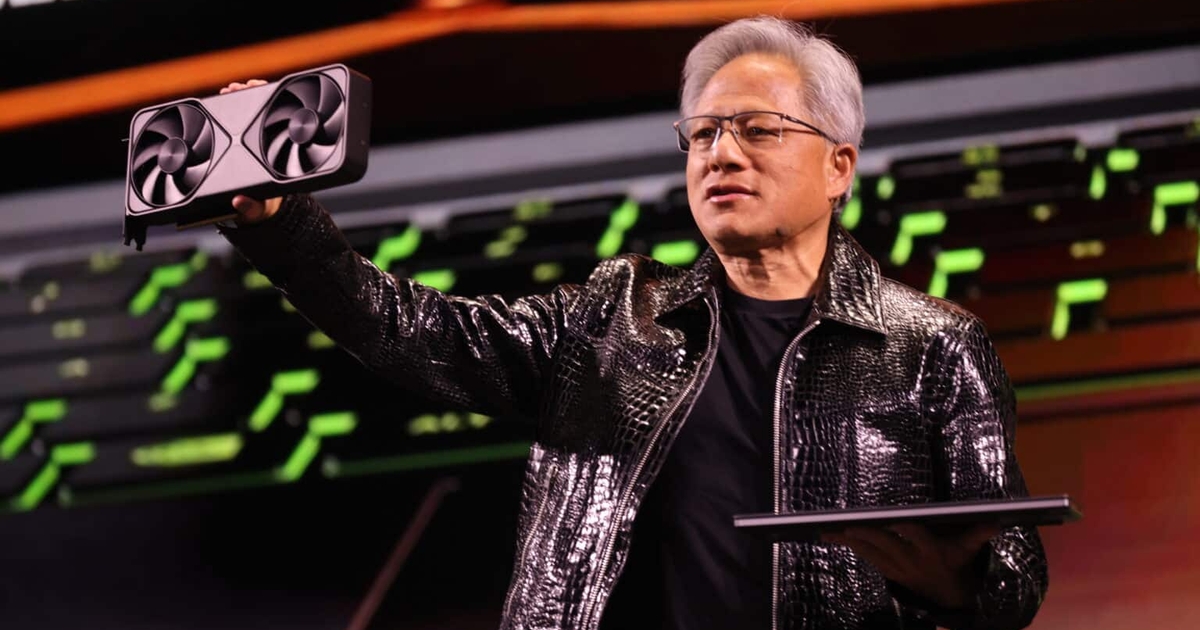

Speaking at Nvidia’s “Quantum Day” event, which was part of the company’s annual GTC Conference, Huang acknowledged that his previous comments did not come across as intended.

“This is the first event in history where a company CEO invites all of the guests to explain why he was wrong,” Huang said.

Back in January, Huang’s remarks sent quantum computing stocks tumbling after he stated that 15 years was “on the early side” for when the technology might become practical. At the time, he suggested that a 20-year timeline was something “a whole bunch of us would believe.”

On Thursday, in his opening remarks, Huang drew parallels between the current state of quantum computing and Nvidia’s early struggles. He noted that it took Nvidia over two decades to develop its software and hardware business.

He also expressed surprise that his initial comments had influenced the stock market, joking that he had not realized some quantum computing companies were publicly traded.

“How could a quantum computer company be public?” Huang said.

The event featured panels with representatives from 12 quantum computing companies and startups, signaling a form of reconciliation between Nvidia, which specializes in conventional computing, and the quantum computing sector. Huang’s earlier remarks had prompted pushback from multiple quantum industry executives.

A third panel included representatives from Microsoft and Amazon Web Services, two of Nvidia’s key customers that are also investing in quantum technology.

Nvidia has its own reasons for embracing quantum computing. While quantum computers are still in development, much of the research being conducted relies on powerful classical computing simulators—systems Nvidia specializes in.

Additionally, some experts believe that quantum computers may eventually require traditional computing infrastructure to operate efficiently. Nvidia is working on technology and software to integrate graphics processing units (GPUs) with quantum chips.

“Of course, quantum computing has the potential and all of our hopes that it will deliver extraordinary impact,” Huang said on Thursday. “But the technology is insanely complicated.”

As part of its commitment to quantum research, Nvidia announced plans this week to build a research center in Boston. The facility will enable quantum computing companies to collaborate with researchers from Harvard and the Massachusetts Institute of Technology. The center will be equipped with several racks of Nvidia’s Blackwell AI servers.

Quantum computing has been a theoretical pursuit since the 1980s, when California Institute of Technology professor Richard Feynman first introduced the concept.

Unlike classical computers, which process data as bits that are each either 0 or 1, quantum computers use qubits, which can exist in a combination of both states and yield a definite 0 or 1 only probabilistically when measured. Experts anticipate that quantum technology will eventually solve complex problems with vast numbers of possible solutions, such as codebreaking, optimizing delivery routes, and simulating chemistry or weather patterns.

Despite ongoing advancements, no quantum computer has yet outperformed a classical computer in solving a practical, real-world problem. However, Google claimed late last year to have made progress in quantum error correction.

During the panel, one question touched on whether quantum computing could pose a threat to companies like Nvidia, which rely on transistor-based computing.

“A long time ago, somebody asked me, ‘So what’s accelerated computing good for?’” Huang said, referring to the GPU-driven computing that Nvidia specializes in.

“I said, a long time ago, because I was wrong, this is going to replace computers,” he continued. “This is going to be the way computing is done, and everything, everything is going to be better. And it turned out I was wrong.”